The technology behind Meta’s artificial intelligence model, which can translate 200 different languages, is described in a paper published in Nature. The model expands the number of languages that can be handled by machine translation.

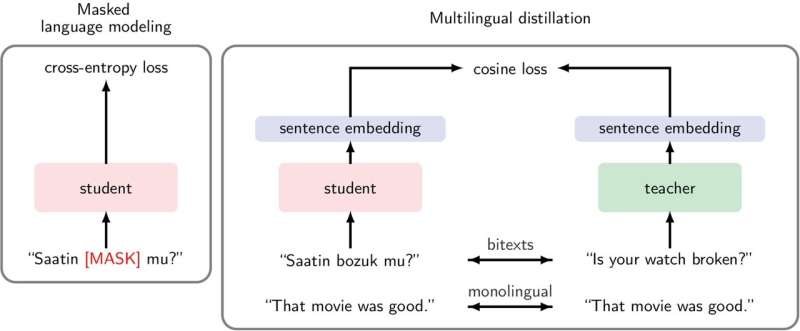

Neural machine translation models use artificial neural networks to translate between languages. These models typically need a large amount of accessible online data to train on. For some languages, termed “low-resource languages,” that data may not be publicly, cheaply, or commonly available. Increasing the number of languages a model translates can also degrade the quality of its translations.

Marta Costa-jussà and the No Language Left Behind (NLLB) team have developed a cross-language approach that allows neural machine translation models to learn to translate low-resource languages by drawing on their existing ability to translate high-resource languages.

As a result, the researchers have developed an online multilingual translation tool, called NLLB-200. It covers 200 languages, includes three times as many low-resource languages as high-resource ones, and performs 44% better than pre-existing systems.

Because the researchers had access to only 1,000–2,000 samples for many low-resource languages, they used a language identification system to find more text in those languages and so increase the volume of training data for NLLB-200. The team also mined bilingual text data from Internet archives, which helped improve the quality of NLLB-200’s translations.
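To illustrate how a language identification step can grow a training corpus, here is a minimal sketch. The article does not describe the classifier NLLB-200 actually used, so this swaps in a toy character-trigram naive Bayes identifier. The class `NaiveBayesLID`, the helper `filter_corpus`, the seed sentences and the language codes `eng` and `swh` are all hypothetical. The idea is to train on a few labeled seed samples per language, then keep only the unlabeled lines the classifier assigns to the target language.

```python
import math
from collections import Counter


def char_ngrams(text, n=3):
    """Return overlapping character n-grams, padding with spaces to mark word edges."""
    padded = f" {text.lower()} "
    return [padded[i:i + n] for i in range(len(padded) - n + 1)]


class NaiveBayesLID:
    """Hypothetical toy language identifier: character-trigram naive Bayes.

    Assumes a uniform prior over languages, so only n-gram likelihoods are compared.
    """

    def __init__(self, n=3, alpha=1.0):
        self.n = n
        self.alpha = alpha  # add-alpha smoothing over the training vocabulary
        self.counts = {}    # language code -> Counter of n-grams
        self.totals = {}    # language code -> total number of n-grams seen
        self.vocab = set()

    def fit(self, samples):
        """Train on (language_code, text) pairs."""
        for lang, text in samples:
            grams = char_ngrams(text, self.n)
            self.counts.setdefault(lang, Counter()).update(grams)
            self.vocab.update(grams)
        self.totals = {lang: sum(c.values()) for lang, c in self.counts.items()}
        return self

    def score(self, text, lang):
        """Log-likelihood of the text's n-grams under one language's model."""
        counts, total = self.counts[lang], self.totals[lang]
        denom = total + self.alpha * len(self.vocab)
        return sum(math.log((counts[g] + self.alpha) / denom)
                   for g in char_ngrams(text, self.n))

    def predict(self, text):
        """Return the language code with the highest log-likelihood."""
        return max(self.counts, key=lambda lang: self.score(text, lang))


def filter_corpus(lid, lines, target):
    """Keep only the lines the classifier labels as the target language."""
    return [line for line in lines if lid.predict(line) == target]


# Hypothetical seed data: a handful of labeled sentences per language.
SEED = [
    ("eng", "the children are playing football in the park"),
    ("eng", "I like reading books in the evening"),
    ("swh", "watoto wanacheza mpira katika bustani"),
    ("swh", "ninapenda kusoma vitabu jioni"),
]

if __name__ == "__main__":
    lid = NaiveBayesLID().fit(SEED)
    crawled = ["the children are reading books", "watoto wanapenda vitabu"]
    print(filter_corpus(lid, crawled, "swh"))
```

A real pipeline would train on far more seed text per language and would typically reject low-confidence predictions rather than always picking the top language. The toy model only shows the shape of the filtering step.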

The authors note that this tool could help people who speak rarely translated languages access the Internet and other technologies. They also highlight education as a particularly important application, since the model could help speakers of low-resource languages access more books and research articles. However, Costa-jussà and co-authors acknowledge that mistranslations may still occur.

More information:

Scaling neural machine translation to 200 languages, Nature (2024). DOI: 10.1038/s41586-024-07335-x

Citation:

Meta’s AI can translate dozens of under-resourced languages (2024, June 7)

retrieved 8 June 2024

from https://techxplore.com/news/2024-06-meta-ai-dozens-resourced-languages.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.