A team of computer scientists, engineers, mathematicians and cognitive scientists, led by the University of Cambridge, has developed an open-source evaluation platform called CheckMate, which allows human users to interact with and evaluate the performance of large language models (LLMs).

The researchers tested CheckMate in an experiment where human participants used three LLMs (InstructGPT, ChatGPT and GPT-4) as assistants for solving undergraduate-level mathematics problems.

The team studied how well LLMs can assist participants in solving problems. Despite a generally positive correlation between a chatbot’s correctness and perceived helpfulness, the researchers also found instances where the LLMs were incorrect but still useful to the participants. However, participants judged certain incorrect LLM outputs to be correct. This was most notable in LLMs optimized for chat.

The researchers suggest that models that communicate uncertainty, respond well to user corrections, and can provide a concise rationale for their recommendations make better assistants. Human users of LLMs should verify their outputs carefully, given their current shortcomings.

The results, reported in the Proceedings of the National Academy of Sciences, could be useful both in informing AI literacy training and in helping developers improve LLMs for a wider range of uses.

While LLMs are becoming increasingly powerful, they can also make mistakes and provide incorrect information, which could have negative consequences as these systems become more integrated into our everyday lives.

“LLMs have become wildly popular, and evaluating their performance in a quantitative way is important, but we also need to evaluate how well these systems work with and can help people,” said co-first author Albert Jiang, from Cambridge’s Department of Computer Science and Technology. “We don’t yet have comprehensive ways of evaluating an LLM’s performance when interacting with humans.”

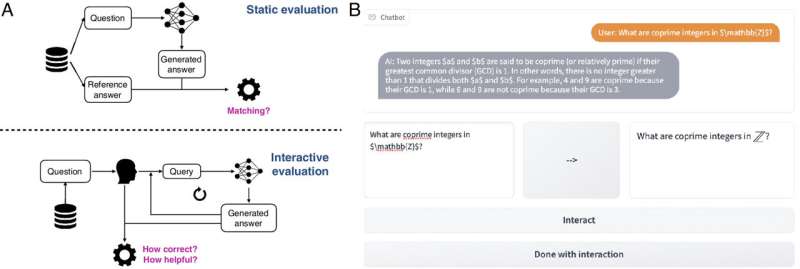

The standard way to evaluate LLMs relies on static pairs of inputs and outputs, which disregards the interactive nature of chatbots and how that changes their usefulness in different scenarios. The researchers developed CheckMate to help answer these questions. It is designed for, but not limited to, applications in mathematics.

“When talking to mathematicians about LLMs, many of them fall into one of two main camps: either they think that LLMs can produce complex mathematical proofs on their own, or that LLMs are incapable of simple arithmetic,” said co-first author Katie Collins from the Department of Engineering. “Of course, the truth is probably somewhere in between, but we wanted to find a way of evaluating which tasks LLMs are suitable for and which they aren’t.”

The researchers recruited 25 mathematicians, from undergraduate students to senior professors, to interact with three different LLMs (InstructGPT, ChatGPT, and GPT-4) and evaluate their performance using CheckMate. Participants worked through undergraduate-level mathematical theorems with the assistance of an LLM and were asked to rate each individual LLM response for correctness and helpfulness. Participants did not know which LLM they were interacting with.

The researchers recorded the sorts of questions asked by participants, how participants reacted when they were presented with a fully or partially incorrect answer, whether and how they attempted to correct the LLM, and whether they asked for clarification. Participants had varying levels of experience with writing effective prompts for LLMs, and this often affected the quality of responses that the LLMs provided.

An example of an effective prompt is “what is the definition of X” (X being a concept in the problem), as chatbots can be very good at retrieving concepts they know of and explaining them to the user.

“One of the things we found is the surprising fallibility of these models,” said Collins. “Sometimes, these LLMs will be really good at higher-level mathematics, and then they’ll fail at something far simpler. It shows that it’s vital to think carefully about how to use LLMs effectively and appropriately.”

However, like the LLMs, the human participants also made mistakes. The researchers asked participants to rate how confident they were in their own ability to solve the problem they were using the LLM for. In cases where participants were less confident in their own abilities, they were more likely to rate incorrect generations by the LLM as correct.

“This kind of gets to a big challenge of evaluating LLMs, because they’re getting so good at generating nice, seemingly correct natural language, that it’s easy to be fooled by their responses,” said Jiang. “It also shows that while human evaluation is useful and important, it’s nuanced, and sometimes it’s wrong. Anyone using an LLM, for any application, should always pay attention to the output and verify it themselves.”

Based on the results from CheckMate, the researchers say that newer generations of LLMs are increasingly able to collaborate helpfully and correctly with human users on undergraduate-level math problems, as long as the user can assess the correctness of LLM-generated responses.

Even if the answers may be memorized and can be found somewhere on the internet, LLMs have the advantage over traditional search engines of being flexible in their inputs and outputs (though they should not replace search engines in their current form).

While CheckMate was tested on mathematical problems, the researchers say their platform could be adapted to a wide range of fields. In the future, this type of feedback could be incorporated into the LLMs themselves, although none of the CheckMate feedback from the current study has been fed back into the models.

“These kinds of tools can help the research community to have a better understanding of the strengths and weaknesses of these models,” said Collins. “We wouldn’t use them as tools to solve complex mathematical problems on their own, but they can be useful assistants if the users know how to take advantage of them.”

More information:

Katherine M. Collins et al, Evaluating language models for mathematics through interactions, Proceedings of the National Academy of Sciences (2024). DOI: 10.1073/pnas.2318124121

Citation:

New open-source platform allows users to evaluate performance of AI-powered chatbots (2024, June 4), retrieved 4 June 2024 from https://techxplore.com/news/2024-06-source-platform-users-ai-powered.html